“ColorVideoVDP: A Visual Difference Predictor for Image, Video, and Display Distortions”

Abstract:

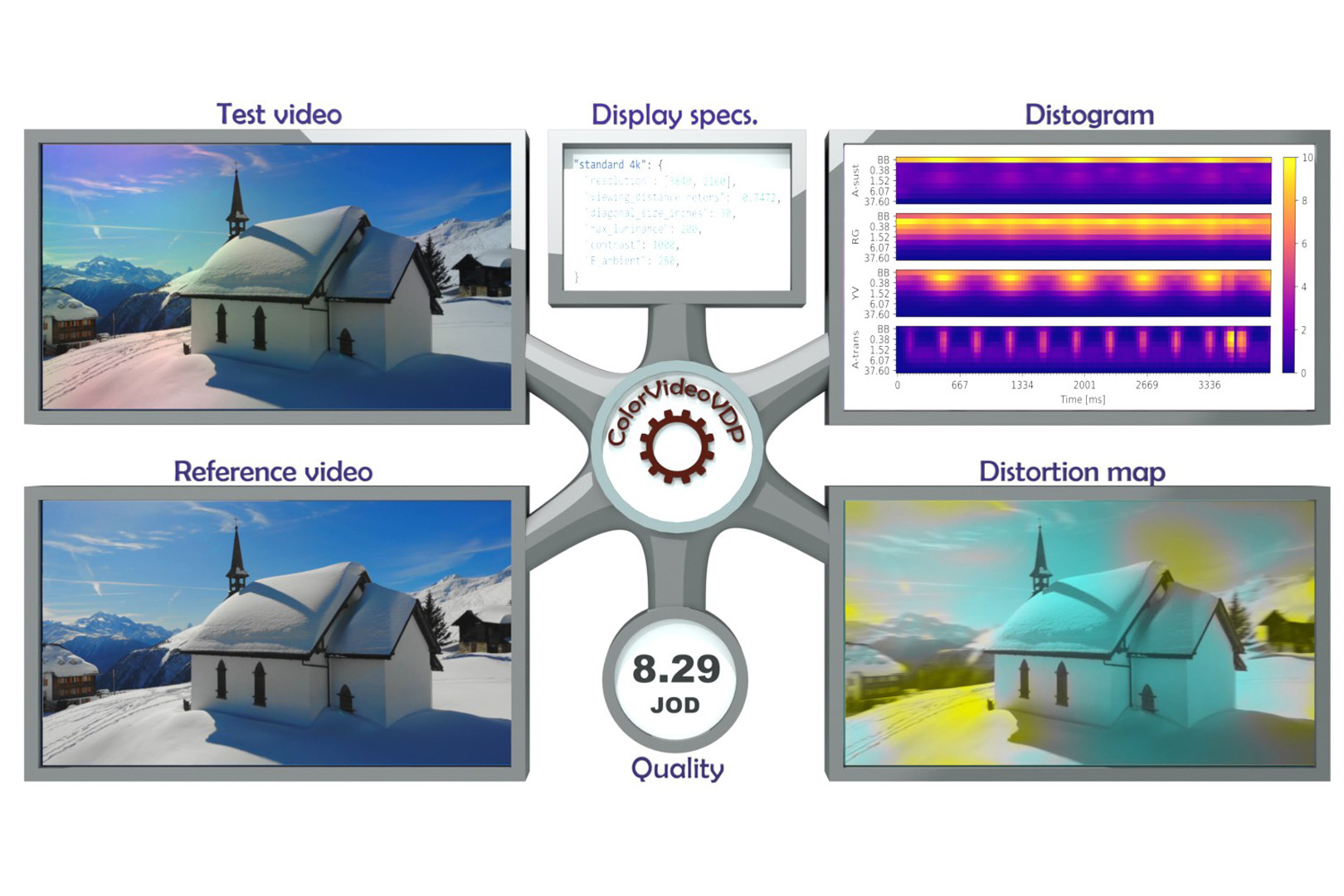

ColorVideoVDP is a differentiable image and video quality metric that models human color and spatiotemporal vision. It is targeted and calibrated to assess image distortions due to AR/VR display technologies and video streaming, and it can handle both SDR and HDR content.
