“NeuralTO: Neural Reconstruction and View Synthesis of Translucent Objects”

Title:

- NeuralTO: Neural Reconstruction and View Synthesis of Translucent Objects

Abstract:

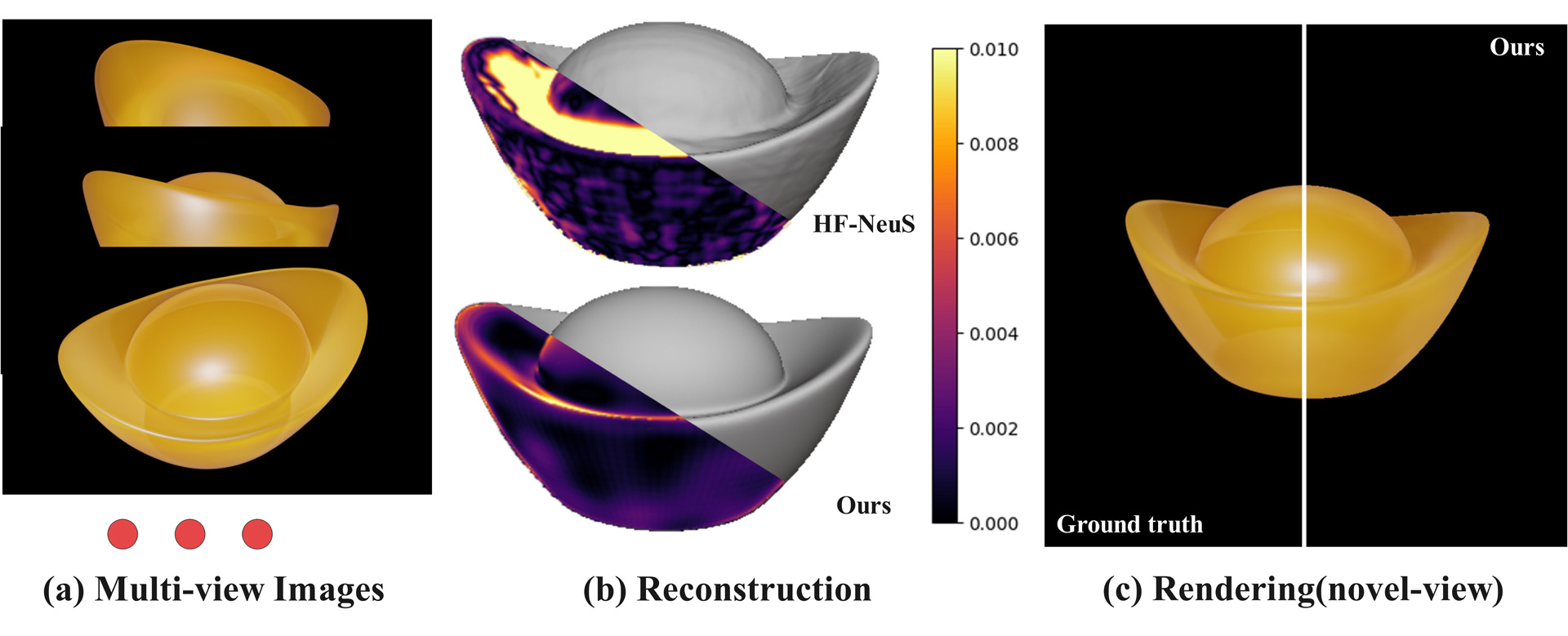

We introduce a novel two-stage framework for high-fidelity surface reconstruction and novel-view synthesis of translucent objects. Within this framework, we propose a theoretical model of the neural radiance field for translucent objects that parametrizes the density field with a constant extinction coefficient.
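The abstract does not say how the constant extinction coefficient enters the renderer. The sketch below is a hypothetical illustration, not the NeuralTO formulation. It assumes a homogeneous medium whose density equals a constant `sigma_t` wherever a signed distance function is negative (inside the object) and zero outside, and it composites colour along a ray with the standard NeRF-style quadrature. The names `density_from_sdf`, `render_ray`, `sdf`, and `color` are invented for this example. Under a constant extinction coefficient, the transmittance through a thickness `d` of material reduces to the Beer-Lambert law, `exp(-sigma_t * d)`.

```python
import math
from typing import Callable, Sequence, Tuple

RGB = Tuple[float, float, float]


def density_from_sdf(sdf_value: float, sigma_t: float) -> float:
    """Hypothetical density: constant extinction sigma_t inside the object, zero outside."""
    return sigma_t if sdf_value < 0.0 else 0.0


def render_ray(
    ts: Sequence[float],
    sdf: Callable[[float], float],
    color: Callable[[float], RGB],
    sigma_t: float,
) -> Tuple[RGB, float]:
    """Alpha-composite colour along a ray sampled at increasing depths ts.

    Returns the accumulated RGB and the transmittance left after the last segment.
    """
    rgb = [0.0, 0.0, 0.0]
    transmittance = 1.0
    for i in range(len(ts) - 1):
        delta = ts[i + 1] - ts[i]
        # Evaluate the fields at the midpoint of each ray segment.
        t_mid = 0.5 * (ts[i] + ts[i + 1])
        sigma = density_from_sdf(sdf(t_mid), sigma_t)
        # Opacity of a homogeneous segment of length delta (Beer-Lambert).
        alpha = 1.0 - math.exp(-sigma * delta)
        weight = transmittance * alpha
        c = color(t_mid)
        for k in range(3):
            rgb[k] += weight * c[k]
        transmittance *= 1.0 - alpha
    return (rgb[0], rgb[1], rgb[2]), transmittance
```

For example, a ray sampled uniformly on [0, 3] that crosses a slab occupying depths 1 to 2 (`sdf = lambda t: max(1.0 - t, t - 2.0)`) with `sigma_t = 2.0` leaves a transmittance of about `exp(-2.0)`, because the per-segment factors multiply to `exp(-sigma_t * 1.0)`. With a constant white colour, each channel of the result equals `1 - transmittance`, since the compositing weights sum to the total opacity.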
