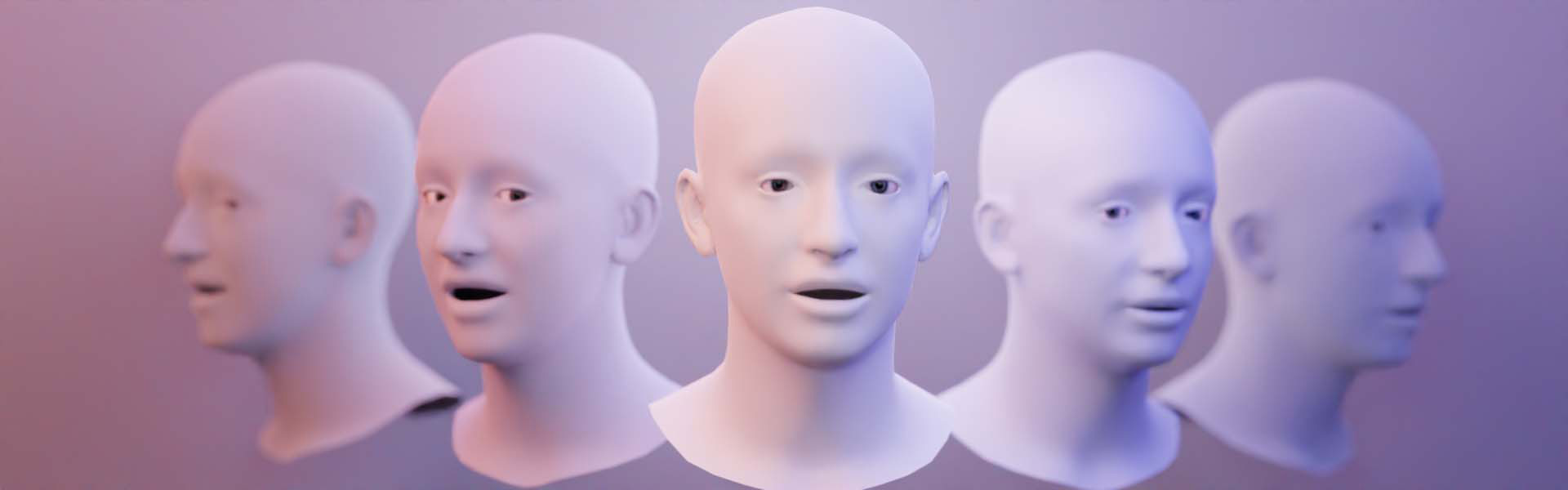

“A Multi-modal Framework for 3D Facial Animation Control” by Cao, Xiao and Shi

Conference:

Type(s):

Title:

- A Multi-modal Framework for 3D Facial Animation Control

Session/Category Title: Animation & Simulation

Presenter(s)/Author(s):

Abstract:

We present a multi-modal framework for 3D facial animation control that uses video and audio input to drive the model with various geometry and rigging information. Such information is easy to obtain in practice and robust in animation control. We conduct user studies to evaluate naturalness and accuracy.

References:

[1]

Joon Son Chung, Arsha Nagrani, and Andrew Zisserman. 2018. Voxceleb2: Deep speaker recognition. arXiv preprint arXiv:1806.05622 (2018).

[2]

Yao Feng, Haiwen Feng, Michael J. Black, and Timo Bolkart. 2021. Learning an animatable detailed 3D face model from in-the-wild images. ACM Transactions on Graphics (Aug 2021), 1–13. https://doi.org/10.1145/3450626.3459936

[3]

Tianye Li, Timo Bolkart, Michael J. Black, Hao Li, and Javier Romero. 2017. Learning a model of facial shape and expression from 4D scans. ACM Transactions on Graphics (Dec 2017), 1–17. https://doi.org/10.1145/3130800.3130813

[4]

Jinbo Xing, Menghan Xia, Yuechen Zhang, Xiaodong Cun, Jue Wang, and Tien-Tsin Wong. 2023. CodeTalker: Speech-Driven 3D Facial Animation with Discrete Motion Prior. 2023 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) (2023), 12780–12790.

[5]

Xucong Zhang, Yusuke Sugano, Mario Fritz, and Andreas Bulling. 2015. Appearance-based gaze estimation in the wild. 2015 IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2015), 4511–4520.